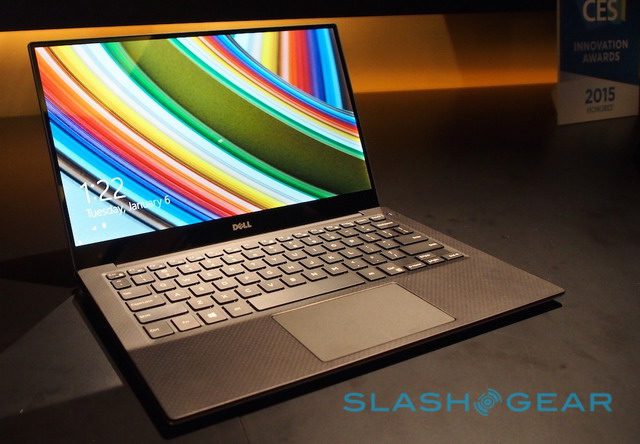

I’ve been meaning to post some thoughts on my new Dell XPS 13, but haven’t quite gotten around to it. What I did do tonight was type up a long comment that I posted over on PC World’s review of the XPS 13 (by none other than the legendary Gordon Mah Ung). Another fantastic review is by Lisa Gade of MobileTechReview (the photo below is courtesy of her review).

My comment/mini-review is below:

***

I now own the Dell XPS 13 – the top-end Core i7 QHD+ model with the 512 GB SSD. It’s quite a machine – Dell did an impressive job with the build quality, and the overall package is excellent. That top-end model is expensive, though: the 512 GB SSD upgrade alone was $300. Ouch! I’m ticked that Dell doesn’t let us truly customize what we want – I wanted the 1080p display but couldn’t get the 512 GB SSD without also getting the QHD+ display. Why does Apple offer more customization now than Dell? That’s just wrong.

Four main things irk me now:

1) The M.2 SSD isn’t PCIe, and Dell told you they’re planning to release a version of the laptop that uses PCIe. What the hell? I just got this thing a few days ago, and it’s already going to be replaced by something newer? Is Dell taking PR lessons from Osborne?

2) Windows 8.1 is still a mess in high DPI mode. Well, to be fair, the OS itself isn’t too awful with the DPI scaling set to 250%, but apps are a mess. Blurry text in TweetDeck. Weird scaling and overlap of UI elements in all sorts of other apps. A magnifying glass in Lightroom the size of a grain of sand. It’s frustrating to realize I have to wait for Windows 10 to supposedly make this all better. Microsoft really wasn’t ready for laptops with screens quite this high-res. They should have been deprecating APIs and forcing developers to code for high-res displays, or found some way to auto-fix the issue.

3) The battery life is nowhere near what Dell claims. I’m used to OEMs being dishonest about real-world battery life, but we’re talking a 50% difference here. In real-world use, meaning productivity apps and Lightroom (zero gaming), my XPS 13 lasts about 6-7 hours. Good, but not great. And Dell promised great.

4) With all the rumours of Intel releasing Skylake this year, it feels like there will be a whole new generation of laptops when Windows 10 comes out, giving Broadwell U laptops a shelf life of maybe 6-8 months. There’s always something better around the corner, but the delays in Broadwell and the noise that Intel is already making about Skylake make me concerned that Broadwell U will be jumped over very quickly.

All in all, there’s a LOT to love here, but given that I only buy new laptops about every three years, I’m not sure this is the right one at the right time.